Anqi SHI¹, Hanmo WANG¹, Jihao LUO², Christian PECAUT³, Kaizen LOW³, Stephen En Rong TAY¹, and Alexander LIN¹,*

¹Department of the Built Environment, College of Design and Engineering, National University of Singapore (NUS)

²Department of Information Systems and Analytics, School of Computing (SOC), NUS

³AI Centre for Educational Technologies (AICET), NUS

Sub-Theme

Others

Keywords

Large Language Models (LLMs), AI-supported scaffolding, autonomous learning, relational reconstruction in education, human-AI interaction

Category

Lightning Talks

Introduction

In higher education, where transferable competencies and interdisciplinary problem-solving are emphasised (Nakakoji & Wilson, 2020; Schijf et al., 2025), many students struggle with metacognitive regulation (Zimmerman, 2002), problem-posing (Mishra & Iyer, 2015), and knowledge integration (Ronen & Langley, 2004). Structured scaffolding, such as guided questioning, can foster deeper inquiry and reflection, yet existing applications remain mostly confined to visual learning analytics and seldom extend to inquiry-based or reflective contexts (Yan et al., 2025). Large Language Models (LLMs), such as ChatGPT, show potential for offering personalised guidance. When embedded in well-designed activities, LLMs can shift educators from information providers to facilitators of inquiry (Lin et al., 2024). Unguided use, however, risks superficial learning, technological dependency, and inhibited critical thinking (Gerlich, 2025), while the high volume of student–LLM interactions makes manual review by educators impractical.

Approach

To address these challenges, this study developed the Guided Inquiry and Dialogue Education System (GuIDES), an online learning module hosted on the ScholAIstic platform and co-developed with the AI Centre for Educational Technologies (AICET) at the National University of Singapore (NUS). GuIDES was implemented in PF2203 “Quality and Productivity Management” with ethical approval (Ref. L2024-01-01). It integrates the generative and evaluative functions of LLMs with a scaffolding framework to improve students’ questioning, critical thinking, and reflection.

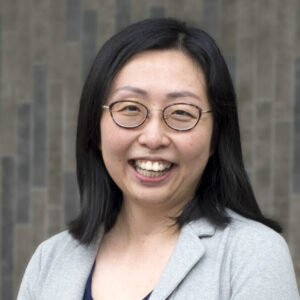

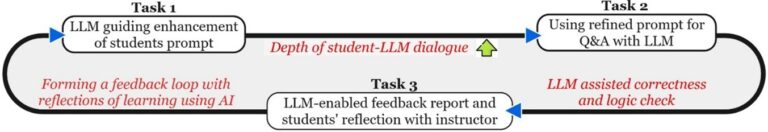

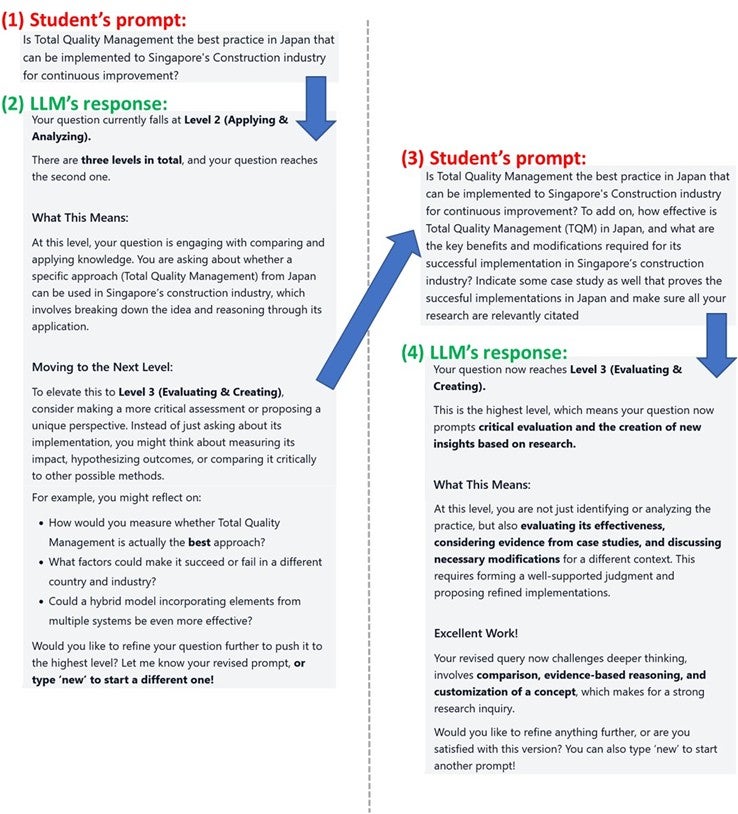

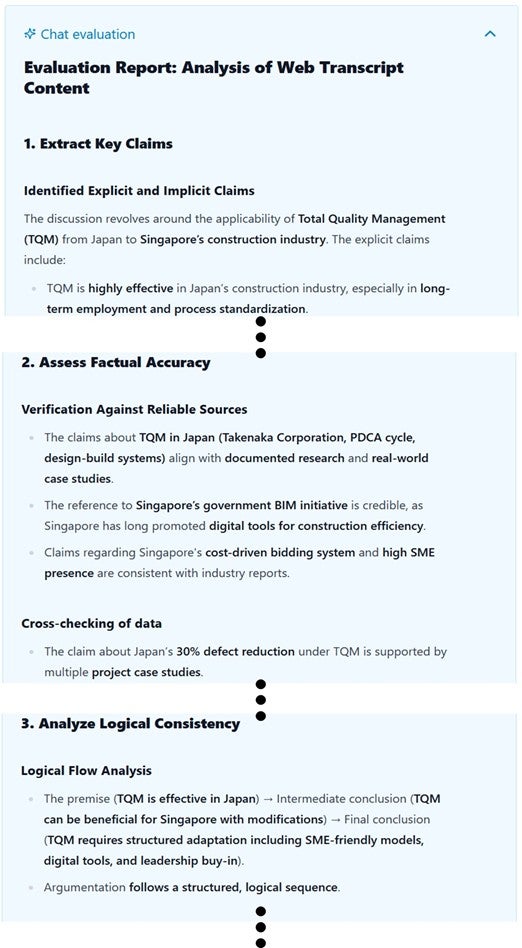

GuIDES follows a three-phase structure (Figure 1). In Task 1 (Question/Prompt Refinement), students design prompts with LLM support and refine them using Costa’s Levels of Questioning (Costa, 1985), advancing from factual to analytical and evaluative inquiry (Figure 2). In Task 2 (Guided Dialogue), students use the prompts refined in Task 1 to engage in deeper Q&A dialogues with the LLM, which then conducts targeted online research (Figure 3). In Task 3 (Feedback and Reflection), a separate LLM module evaluates the coherence and accuracy of the Q&A and generates structured feedback (Figure 4), which students draw on in reflective essays (Figures 5 and 6) to reinforce metacognitive regulation.
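The three-phase flow can be pictured as a small pipeline in which each task consumes the previous task’s output. The sketch below is a minimal illustration under stated assumptions, not the ScholAIstic/GuIDES implementation, which the source does not describe: the `LLM` callable, the `Session` record, the task function names and the prompt wording are all hypothetical; the level descriptions paraphrase Costa’s three levels (gathering/recalling, processing, applying/evaluating); and the online research step in Task 2 is omitted.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical LLM interface: takes a prompt string and returns a reply string.
# This is a stand-in; the actual GuIDES/ScholAIstic model calls are not described.
LLM = Callable[[str], str]

# Paraphrase of Costa's three levels of questioning, from recall to evaluation.
COSTA_LEVELS = {
    1: "gathering and recalling information (e.g. define, list, describe)",
    2: "processing information (e.g. compare, explain, analyse)",
    3: "applying and evaluating (e.g. predict, judge, hypothesise)",
}


@dataclass
class Session:
    """Hypothetical record of one student's pass through the three tasks."""
    draft_question: str
    refined_questions: list[str] = field(default_factory=list)
    dialogue: list[tuple[str, str]] = field(default_factory=list)
    feedback: str = ""


def task1_refine(session: Session, llm: LLM) -> None:
    """Task 1: rewrite the draft question at each Costa level, lowest first."""
    for level, description in sorted(COSTA_LEVELS.items()):
        prompt = (f"Rewrite this question at Costa level {level} ({description}): "
                  f"{session.draft_question}")
        session.refined_questions.append(llm(prompt))


def task2_dialogue(session: Session, llm: LLM) -> None:
    """Task 2: use the refined questions as turns in a guided Q&A dialogue."""
    for question in session.refined_questions:
        session.dialogue.append((question, llm(question)))


def task3_feedback(session: Session, evaluator: LLM) -> None:
    """Task 3: a separate evaluator model reviews the whole Q&A transcript."""
    transcript = "\n".join(f"Q: {q}\nA: {a}" for q, a in session.dialogue)
    session.feedback = evaluator(
        "Assess the coherence and accuracy of this Q&A and give structured feedback:\n"
        + transcript
    )


def run_guides(draft: str, tutor: LLM, evaluator: LLM) -> Session:
    """Run the three tasks in order and return the populated session."""
    session = Session(draft_question=draft)
    task1_refine(session, tutor)
    task2_dialogue(session, tutor)
    task3_feedback(session, evaluator)
    return session
```

With stub callables in place of real models, for example `run_guides("What is prefabrication?", tutor=lambda p: "reply", evaluator=lambda p: "feedback")`, the returned session holds three refined questions (one per Costa level), three Q&A turns, and one feedback string. Passing the evaluator as its own callable mirrors the text’s use of a separate LLM module for Task 3.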


Results and Discussion

Deployment of GuIDES showed clear improvements in student engagement and learning outcomes. In group presentations, students used GuIDES to compare prefabrication practices in the UK and Singapore, producing slides with actionable improvements for local practices (Figure 7).


To evaluate its effectiveness, a quasi-experimental design compared three learning modes across three academic years of PF2203: traditional web search (control group, Year 1), direct ChatGPT use (Year 2), and structured GuIDES use (Year 3). The design is quasi-experimental because it followed intact course cohorts rather than randomly assigned groups; consistent course content and marking schemes across the three years ensured comparability (Tang & Hew, 2022).

Project scores (Table 1), assessed with the marking scheme in Table 2, showed that the GuIDES cohort outperformed the controls by 15.8–22.6%. End-of-course surveys showed that GuIDES outperformed both the ChatGPT and control groups, especially in Authentic Learning (Table 3), and, when LLM-specific use was compared, scored higher in cross-disciplinary integration, authentic engagement, and motivation (Table 4). A non-graded quiz taken without platform access (Table 5) confirmed internalisation, with scores improving by 15.6% with ChatGPT and 20.2% with GuIDES. Together, presentation performance, survey responses, and ungraded quiz results indicated that competencies were retained and transferable beyond the tool. Qualitative feedback reinforced this: students noted that GuIDES’s suggestions for improved questioning deepened their critical thinking and analysis.
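The source does not state how these percentage gains were computed. Assuming they are relative differences between cohort mean scores (rather than percentage-point differences), the gain of a cohort $G$ over a comparison cohort $C$ on the same assessment would be:

$$\Delta_{G,C} = \frac{\bar{x}_G - \bar{x}_C}{\bar{x}_C} \times 100\%$$

where $\bar{x}_G$ and $\bar{x}_C$ are the two cohorts’ mean scores (for presentations, out of a maximum of 20). Under a percentage-point reading the denominator would instead be the maximum attainable score, so the two readings generally give different values for the same data.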

Table 1. Averaged presentation scores (for each semester, number of student groups = 4; maximum score per presentation = 20)


Table 2. Marking scheme for project presentations


Table 3. Comparison of end-of-course survey results (five-point Likert scale: 5 = "Strongly Agree", 1 = "Strongly Disagree")


Table 4. Comparison of end-of-course survey results on LLM tool usage (five-point Likert scale: 5 = "Strongly Agree", 1 = "Strongly Disagree")


Table 5. Scores from non-graded self-learning quizzes

(Results from unattempted questions are excluded at the question level, and results from incomplete assessments are excluded at the exam-taker level.)
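The two exclusion rules operate at different levels, which a short sketch makes concrete. This is an illustration under assumptions, not the authors’ analysis script: the record format (a per-question score dictionary with `None` marking an unattempted question, paired with a `completed` flag per quiz taker) and the function name `per_question_means` are hypothetical.

```python
from statistics import mean
from typing import Optional

# Hypothetical record for one quiz taker: question id -> score,
# with None marking a question the student did not attempt.
Answers = dict[str, Optional[float]]


def per_question_means(attempts: list[tuple[Answers, bool]]) -> dict[str, float]:
    """Mean score per question after applying both exclusion rules.

    attempts: (answers, completed) pairs, one per quiz taker.
    Exam-taker level: an incomplete assessment (completed=False) is dropped entirely.
    Question level: an unattempted question (score None) is skipped for that
    question only, while the taker's other answers are still counted.
    """
    kept = [answers for answers, completed in attempts if completed]
    scores_by_question: dict[str, list[float]] = {}
    for answers in kept:
        for question_id, score in answers.items():
            if score is not None:
                scores_by_question.setdefault(question_id, []).append(score)
    return {qid: mean(scores) for qid, scores in scores_by_question.items()}
```

In this reading, a student who skips one question still contributes to every other question’s mean, whereas a student who abandons the quiz contributes nothing; averaging unattempted questions as zero would instead pull the means down.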


Student reflections (Figures 5 and 6) also revealed enhanced self-monitoring and higher-order cognitive skills. These qualitative insights, together with increased survey and quiz scores and improved project scores, provide triangulated evidence of effectiveness. Notably, the educator’s role shifted from step-by-step guidance to overseeing inquiry scaffolding and reflection on LLM usage, enabling students to become independent, critical users of AI tools.

Conclusion and Significance

GuIDES represents a paradigm shift: from teacher-led knowledge delivery to student-driven inquiry with intelligent scaffolding. It redefines the relationship among learners, knowledge, technology, and educators, promoting a future-ready model of autonomous learning.

References

Costa, A. L. (1985). Developing minds: A resource book for teaching thinking. ERIC.

Gerlich, M. (2025). AI tools in society: Impacts on cognitive offloading and the future of critical thinking. Societies, 15(1), 6. https://doi.org/10.3390/soc15010006

Lin, A., Shi, A., & Tay, S. E. R. (2024, December 3). Enhancing educational outcomes in quality and productivity management through ChatGPT integration [Paper presentation]. Higher Education Conference in Singapore (HECS) 2024, National University of Singapore, Singapore. https://blog.nus.edu.sg/hecs/hecs2024-alin-et-al/

Mishra, S., & Iyer, S. (2015). An exploration of problem posing-based activities as an assessment tool and as an instructional strategy. Research and Practice in Technology Enhanced Learning, 10, 1-19. https://doi.org/10.1007/s41039-015-0006-0

Nakakoji, Y., & Wilson, R. (2020). Interdisciplinary learning in mathematics and science: Transfer of learning for 21st-century problem solving at university. Journal of Intelligence, 8(3), 32. https://doi.org/10.3390/jintelligence8030032

Ronen, M., & Langley, D. (2004). Scaffolding complex tasks by open online submission: Emerging patterns and profiles. Journal of Asynchronous Learning Networks, 8(4), 39-61. https://doi.org/10.24059/olj.v8i4.1809

Schijf, J. E., van der Werf, G. P., & Jansen, E. P. (2025). The relationship between first-year university students’ characteristics and their levels of interdisciplinary understanding. Innovative Higher Education, 1-22. https://doi.org/10.1007/s10755-025-09798-w

Tang, Y., & Hew, K. F. (2022). Effects of using mobile instant messaging on student behavioral, emotional, and cognitive engagement: A quasi-experimental study. International Journal of Educational Technology in Higher Education, 19(1), 3. https://doi.org/10.1186/s41239-021-00306-6

Yan, L., Martinez-Maldonado, R., Jin, Y., Echeverria, V., Milesi, M., Fan, J., Zhao, L., Alfredo, R., Li, X., & Gašević, D. (2025). The effects of generative AI agents and scaffolding on enhancing students’ comprehension of visual learning analytics. Computers & Education, 105322. https://doi.org/10.1016/j.compedu.2025.105322

Zimmerman, B. J. (2002). Becoming a self-regulated learner: An overview. Theory Into Practice, 41(2), 64-70. https://doi.org/10.1207/s15430421tip4102_2