CHEW Lup Wai and Stephen TAY En Rong

Department of the Built Environment, College of Design and Engineering (CDE), National University of Singapore (NUS)

Sub-Theme

Others

Keywords

Peer learning, student-generated questions, assessment, student performance

Category

Lightning Talks

Context

This study evaluates the impact of scenario-based student-generated questions and answers (sb-SGQA) by comparing student performance and its variability across two cohorts of PF3105 “Research Methods”, a core course in the B.Sc. Project and Facilities Management undergraduate programme. In the sb-SGQA approach, students create questions based on real-life scenarios and suggest answers to them (Chin & Osborne, 2008). This approach allows students to connect professional experiences with academic content and enhances their problem-solving and analytical skills (Yu & Wu, 2020). The sb-SGQA approach has been employed in courses in the College of Design and Engineering (Du & Tay, 2022; Tay, 2022) and the College of Humanities and Sciences (Tay & Liu, 2023) at the National University of Singapore (NUS).

Methodology

The course assessment comprises 40% continual assessment (CA) and a 60% final exam. In the Academic Year (AY) 2023/24 cohort with sb-SGQA, the CA was done in groups of four to six students and consisted of (1) a group research project (30%) and (2) an sb-SGQA group assignment (10%). The sb-SGQA assignment was completed within the same groups but was independent of the group research project, which covered a different topic. In the AY 2024/25 cohort without sb-SGQA, the group research project carried the full 40% of the CA. In both cohorts, the final exam had the same format and was marked out of 60. The same instructor set the questions and graded the exams and continual assessments in both cohorts.
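As a minimal illustration of the weighting above, the sketch below computes a student's overall course mark under each cohort's scheme. The dictionary keys, the function name `course_mark`, and the convention of passing each component as a fraction of its full mark are hypothetical choices for illustration; they are not the course's actual grading tools.

```python
# Hypothetical sketch of the course-mark weighting (percent of the overall course mark).
# Each component score is passed as a fraction of that component's full mark,
# e.g. 45/60 on the final exam becomes 0.75.
WEIGHTS = {
    "AY2023/24": {"group_project": 30, "sb_sgqa": 10, "final_exam": 60},
    "AY2024/25": {"group_project": 40, "final_exam": 60},
}


def course_mark(cohort: str, fractions: dict[str, float]) -> float:
    """Return a student's overall course mark out of 100 for the given cohort."""
    weights = WEIGHTS[cohort]
    missing = set(weights) - set(fractions)
    if missing:
        raise ValueError(f"missing component scores: {sorted(missing)}")
    for name in weights:
        if not 0.0 <= fractions[name] <= 1.0:
            raise ValueError(f"{name} must be a fraction between 0 and 1")
    # Weighted sum: each component contributes weight x fraction of its full mark.
    return sum(weight * fractions[name] for name, weight in weights.items())


# Example: group project 80%, sb-SGQA assignment 90%, final exam 45/60.
print(course_mark("AY2023/24", {"group_project": 0.8, "sb_sgqa": 0.9, "final_exam": 45 / 60}))
print(course_mark("AY2024/25", {"group_project": 0.8, "final_exam": 45 / 60}))
```

Keeping the weights in one table per cohort makes the only structural difference between the two schemes explicit: the 10% sb-SGQA component in AY 2023/24 is absorbed into the group research project in AY 2024/25.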

Results and Discussion

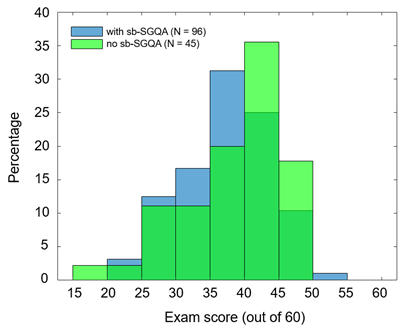

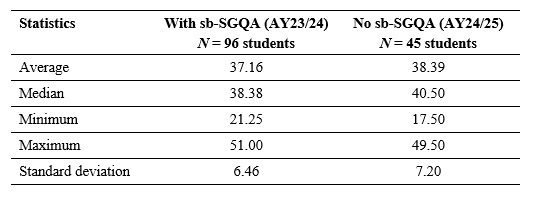

Figure 1 shows the distributions of the final exam scores of the two cohorts, and the descriptive statistics are summarised in Table 1. In the AY 2023/24 intervention cohort, no student scored in the 15-20 band and one student scored in the 50-55 band. In the AY 2024/25 control cohort, one student scored in the 15-20 band and no student scored in the 50-55 band. While the averages (37.16 vs 38.39; the difference is not statistically significant) and medians (38.38 vs 40.50) of the two cohorts are similar, both the minimum (21.25 vs 17.50) and the maximum (51.00 vs 49.50) scores are higher in the AY 2023/24 intervention cohort (Table 1). The higher minimum suggests that sb-SGQA may help weaker students, although data from additional cohorts would be needed to establish whether this effect is statistically significant. The standard deviation is also lower in the AY 2023/24 cohort with sb-SGQA (6.46 vs 7.20), suggesting that sb-SGQA may reduce the spread of student performance, possibly by helping weaker students learn more effectively and thereby raising the minimum score.
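The banded distribution in Figure 1 and the summary statistics in Table 1 can be computed from raw scores along the lines of the sketch below. It is a hypothetical illustration: the function names, the half-open band convention (a score of exactly 20 is counted in the 20-25 band), and the use of the sample standard deviation are assumptions, since the abstract does not state how band boundaries were handled or which standard deviation was reported. The example scores are made up and are not the study's data.

```python
import statistics
from collections import Counter


def band_counts(scores: list[float], width: int = 5, full_mark: int = 60) -> dict[tuple[int, int], int]:
    """Count scores per mark band, e.g. (15, 20) for the 15-20 band.

    Assumption: bands are half-open, [lower, lower + width), so a score of
    exactly 20 falls in the 20-25 band; a full-mark score joins the top band.
    """
    counts: Counter = Counter()
    for score in scores:
        if not 0 <= score <= full_mark:
            raise ValueError(f"score out of range: {score}")
        lower = min(int(score // width) * width, full_mark - width)
        counts[(lower, lower + width)] += 1
    return dict(sorted(counts.items()))


def describe(scores: list[float]) -> dict[str, float]:
    """Descriptive statistics of the kind summarised in Table 1."""
    return {
        "n": len(scores),
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        "min": min(scores),
        "max": max(scores),
        # Assumption: sample standard deviation (n - 1 denominator).
        "sd": statistics.stdev(scores),
    }


# Made-up scores for illustration only; these are not the study's data.
example = [20.0, 30.0, 40.0, 50.0]
print(band_counts(example))
print(describe(example))
```

Running both functions on each cohort's scores gives the per-band counts and the summary values compared above, so a check such as "does any student fall in the 15-20 band?" becomes a simple dictionary lookup.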

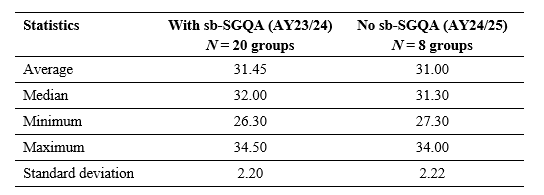

Table 2 compares the group assignment scores of the two cohorts, whose averages are 31.45 and 31.00 out of 40. As there are only eight groups in the AY 2024/25 cohort, plotting the score distribution would not be meaningful. While the final exam results point to a possible benefit for weaker students, no notable difference was observed in the group assignment scores. This similarity could be attributed to other factors, such as group dynamics.

Figure 1. Distribution of the final exam scores of the two cohorts. Both cohorts have a full mark of 60.

Table 1. Descriptive statistics of the final exam score. Both cohorts have a full mark of 60.

Table 2. Descriptive statistics of the group assignment score. Both cohorts have a full mark of 40.

Conclusion and Significance

This study compared student performance between two cohorts and found that the AY 2023/24 cohort, which included sb-SGQA, had no students in the lowest score band (15-20 marks) and one student in the highest band (50-55 marks), suggesting that sb-SGQA may support the performance of weaker students. Although the difference in final exam scores between the cohorts is not statistically significant (p = 0.317), the AY 2023/24 cohort shows higher minimum and maximum scores; the higher minimum in particular may indicate a positive effect of sb-SGQA on weaker students. Moreover, the lower standard deviation in AY 2023/24 (6.46 vs 7.20) suggests that sb-SGQA might reduce performance variability by helping weaker students learn more effectively. However, sb-SGQA did not appear to affect the group assignment scores, which may be influenced more by factors such as group dynamics.

References

Chin, C., & Osborne, J. (2008). Students’ questions: A potential resource for teaching and learning science. Studies in Science Education, 44(1), 1-39. https://doi.org/10.1080/03057260701828101

Du, H., & Tay, E. R. S. (2022). Using scenario-based student-generated questions to improve the learning of engineering mechanics: A case study in civil engineering. In Higher Education Campus Conference (HECC) 2022, 7-8 December, National University of Singapore (pp. 84-88). https://ctlt.nus.edu.sg/wp-content/uploads/2024/10/ebooklet-i.pdf

Tay, E. R. S. (2022). Efficacy of scenario-based student-generated questions in an online environment during COVID-19 across two modules. In Higher Education Campus Conference (HECC) 2022, 7-8 December, National University of Singapore (pp. 220-224). https://ctlt.nus.edu.sg/wp-content/uploads/2024/10/ebooklet-i.pdf

Tay, E. R. S., & Liu, M. H. (2023). Exploratory implementation of scenario-based student-generated questions for students from the humanities and sciences in a scientific inquiry course. In Higher Education Campus Conference (HECC) 2023, 7 December, National University of Singapore. https://blog.nus.edu.sg/hecc2023proceedings/exploratory-implementation-of-scenario-based-student-generated-questions-for-students-from-the-humanities-and-sciences-in-a-scientific-inquiry-course/

Yu, F. Y., & Wu, W. S. (2020). Effects of student-generated feedback corresponding to answers to online student-generated questions on learning: What, why, and how? Computers & Education, 145, 103723. https://doi.org/10.1016/j.compedu.2019.103723