SOO Yuen Jien1,2, Verily TAN1,*, TAN Chun Liang3, LIU Mei Hui1,4, and HE Yiyang5

1 Centre for Teaching, Learning, and Technology (CTLT)

2 School of Computing (SOC), National University of Singapore (NUS)

3 Department of Architecture, College of Design and Engineering (CDE), NUS

4 Department of Food Science and Technology, Faculty of Science (FoS), NUS

5 Department of Sociology and Anthropology, Faculty of Arts and Social Sciences (FASS), NUS

Sub-Theme

Others

Keywords

GenAI, activities, assessment, design, human-AI matrix

Category

Paper Presentation

This presentation shares insights from a faculty Professional Development (PD) initiative that used the Human–AI Matrix (Dev Interrupted, 2023; Fragiadakis et al., 2025; Koh et al., 2023; Pearson & Affias, 2025) as a reflective scaffold to support the intentional integration of Generative Artificial Intelligence (GenAI) into various aspects of course design, such as teaching and learning activities, assessment, and content delivery. The matrix has not been explicitly applied in education or empirically validated, so we introduced it as a design-thinking heuristic rather than a prescriptive model. In the PD context, the matrix helped educators examine the relationship between human and AI contributions to learning activities and assessment.

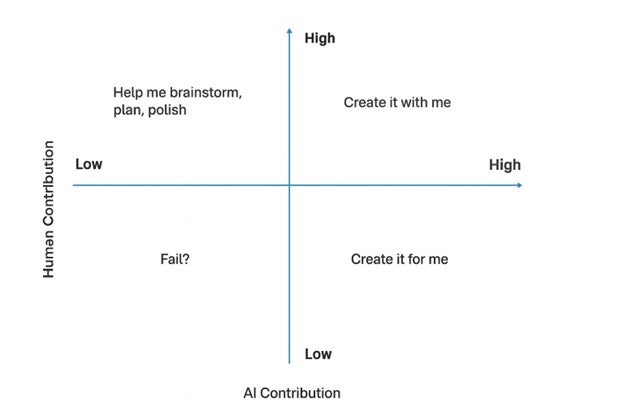

Figure 1. The Human–AI Matrix (adapted from Dev Interrupted, 2023; Liu & Bridgeman, 2023)

The matrix maps instructional tasks along two intersecting axes: human contribution and AI contribution. This yields four quadrants: (1) low AI, low human (undesired); (2) low AI, high human (“help me brainstorm, plan, polish”); (3) high AI, low human (“create for me”); and (4) high AI, high human (“create with me”). Together, these span a continuum from traditional, human-driven learning to fully AI-automated outputs (Liu & Bridgeman, 2023). In our PD, the matrix served as a conversational and design scaffold that allowed faculty to interrogate not just how AI was being used, but why. Faculty considered both AI contribution, in terms of automation, efficiency, support, and creativity, and human contribution, in terms of ownership, skills application, engagement, and decision-making.

The two-day PD workshop engaged 15 faculty participants: six from STEM and nine from non-STEM disciplines. Participants represented fields such as Language and Communication Studies, Media and Communication Studies, Geography, Engineering Design, Electrical Engineering, Computer Science, Medical Ethics, and Finance. After the workshop, participants were given a further 1.5 weeks to submit an AI-integrated activity or assessment design via the Canvas learning management system, using a provided template.

To illustrate the utility of the matrix, we will showcase artifacts from several disciplines that apply diverse instructional strategies. This showcase draws on an evaluation of the submissions by a faculty member and an academic developer. Almost all participants placed their assignments in the “High-Human, High-AI,” “High-Human, Low-AI,” or “Low-Human, High-AI” quadrants, and they sought to ensure substantial human contribution. Faculty also avoided fully AI-generated outputs: image-based assignments, for example, were often situated in specific contexts that required students to interpret and apply knowledge. Faculty used AI either as a scaffold (e.g., to provide an initial structure for report writing) or as a generator of raw data (e.g., to generate datasets for students to analyse). The designs placed strong emphasis on human-led interpretation, critique, and refinement; for example, students were expected to work independently, demonstrate their understanding through separate tests, and manually refine, cite, or critique the AI-generated content.

Hands-on engagement, reflection, peer discussion, and critical analysis featured in many of the submissions. This is consistent with the principles for designing GenAI-integrated activities and assessment proposed by Nguyen et al. (2025). Exemplars will be showcased and discussed during the presentation.

Although there are indications that the matrix is accessible and adaptable, some participants noted its limitations. Specifically, they commented that it could be too static and one-dimensional, and therefore lacked the granularity needed for in-depth design and planning. We are refining the framework in response to this feedback. In particular, we have created a template that scaffolds participants' planning of activities by breaking the design into the different stages of the activity or assessment. This helps faculty visualise how students will interact with GenAI at each stage.

References

Dev Interrupted. (2023, September 13). The Human–AI collaboration matrix, created by Dr. Philippa Hardman, is a fantastic framework for thinking about how we can use AI in our work [LinkedIn post]. LinkedIn. https://www.linkedin.com/pulse/human-ai-collaboration-matrix-created-dr-philippa-hardman-dev-interrupted

Fragiadakis, G., Diou, C., Kousiouris, G., & Nikolaidou, M. (2025). Evaluating human–AI collaboration: A review and methodological framework. arXiv. https://doi.org/10.48550/arXiv.2407.19098

Koh, J., Cowling, M., Jha, M., & Sim, K. N. (2023). The human teacher, the AI teacher and the AIed-teacher relationship. Journal of Higher Education Theory and Practice, 23(17), 142–152. https://doi.org/10.33423/jhetp.v23i17.6543

Liu, D., & Bridgeman, A. (2023). ChatGPT is old news: How do we assess in the age of AI writing co-pilots? The University of Sydney. https://educational-innovation.sydney.edu.au/teaching@sydney/chatgpt-is-old-news-how-do-we-assess-in-the-age-of-ai-writing-co-pilots/

Nguyen, A., Duong, A. T., Nguyen, D. T. B., Lai, V. T. T., & Dang, B. (2025). Guidelines for learning design and assessment for generative artificial intelligence-integrated education: A unified view. Information and Learning Sciences. https://doi.org/10.1108/ILS-11-2024-0148

Pearson, B. L., & Affias, O. (2025, April 4). The matrix that makes your AI strategy make sense. Rethinking developer productivity in the age of agentic AI. Dev Interrupted. https://devinterrupted.substack.com/p/the-matrix-that-makes-your-ai-strategy