LEK Hsiang Hui

Department of Information Systems and Analytics, School of Computing (SOC), National University of Singapore (NUS)

Sub-Theme

Building Learning Relationships

Keywords

Student engagement, large language models (LLMs), learning analytics, scaffolding, differentiated instruction

Category

Lightning Talk

Introduction

As digital learning environments become ubiquitous, platforms like Microsoft Teams have emerged as central hubs for teacher-student interaction. These platforms generate a continuous stream of rich, unstructured dialogue data, offering an unprecedented opportunity for adaptive learning and personalised support. However, this high level of accessibility presents a critical pedagogical challenge: how do we assess the quality of this engagement and use it to foster better student learning? This paper presents a systematic and scalable framework that addresses this challenge by leveraging the Microsoft Graph API and Large Language Models (LLMs) to analyse interaction patterns and inform targeted pedagogical strategies. The objective is to move beyond mere availability and cultivate high-quality engagement that leads to demonstrably better learning outcomes, as advocated by Kuh (2009).

Methodology

The framework consists of a two-phase process for data collection and analysis.

- Automated Data Collection via Microsoft Graph API: To gather interaction data systematically, we wrote a set of Python scripts that use the Microsoft Graph API. After authenticating a service principal with the necessary permissions, the scripts make automated calls to the GET /v1.0/me/chats endpoint, which lists the instructor's chats, and to the GET /v1.0/chats/{conversation.id}/messages endpoint for each chat returned. Following the paginated responses to the end yields the instructor's entire chat history with all of his or her recipients as JSON, effectively exporting every chat message programmatically. These JSON objects are then converted to CSV and fed into an LLM. The fields retained for analysis include id, createdDateTime, fromDisplayName, and content, and entries whose messageType is not “message” (such as system event messages) are ignored.

- Multi-Stage Analysis with Large Language Models: The structured chat data is then processed by an LLM. This study used Google Gemini 2.5 Pro, which proved able to serve this purpose when guided by a series of carefully designed prompts that analyse both the content and the context of interactions.
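The data-collection step in the first bullet above can be sketched in Python as follows. This is a minimal sketch, not the author's scripts: it assumes an OAuth access token has already been obtained (for example with MSAL), uses only the standard library, and the helper names (graph_get_all, flatten_message, export_chats_to_csv) are hypothetical. It follows @odata.nextLink so that every page of each collection is read, and maps Graph's nested from.user.displayName and body.content properties onto the flat fromDisplayName and content columns. Note that /me resolves to a signed-in user and therefore needs a delegated token; with an application-only (service principal) token, the equivalent list call is /users/{user-id}/chats.

```python
"""Minimal sketch: export Teams chat messages via Microsoft Graph to CSV.

Assumptions: an OAuth access token is already available; helper names are
hypothetical. /me needs a delegated (signed-in user) token; an app-only token
would use /users/{user-id}/chats instead.
"""
import csv
import json
import urllib.request
from typing import Iterator, Optional

GRAPH = "https://graph.microsoft.com/v1.0"
FIELDS = ["id", "createdDateTime", "fromDisplayName", "content"]


def graph_get_all(url: str, token: str) -> Iterator[dict]:
    """Yield every item in a Graph collection, following @odata.nextLink across pages."""
    while url:
        req = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
        with urllib.request.urlopen(req) as resp:
            page = json.load(resp)
        yield from page.get("value", [])
        url = page.get("@odata.nextLink")  # missing on the last page, which ends the loop


def flatten_message(msg: dict) -> Optional[dict]:
    """Reduce a chatMessage to the four analysis fields; skip non-'message' entries."""
    if msg.get("messageType") != "message":
        return None  # e.g. systemEventMessage entries such as members being added
    sender = (msg.get("from") or {}).get("user") or {}  # 'from' may be null or an app
    return {
        "id": msg.get("id"),
        "createdDateTime": msg.get("createdDateTime"),
        "fromDisplayName": sender.get("displayName"),
        "content": (msg.get("body") or {}).get("content"),
    }


def export_chats_to_csv(token: str, path: str) -> int:
    """Walk every chat of the signed-in user, write its messages to one CSV, return the row count."""
    rows = []
    for chat in graph_get_all(f"{GRAPH}/me/chats", token):
        for msg in graph_get_all(f"{GRAPH}/chats/{chat['id']}/messages", token):
            row = flatten_message(msg)
            if row is not None:
                rows.append(row)
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

A call such as export_chats_to_csv(token, "chats.csv") returns the number of rows written. Teams message bodies are often HTML, so stripping tags before prompting the LLM may be worthwhile.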

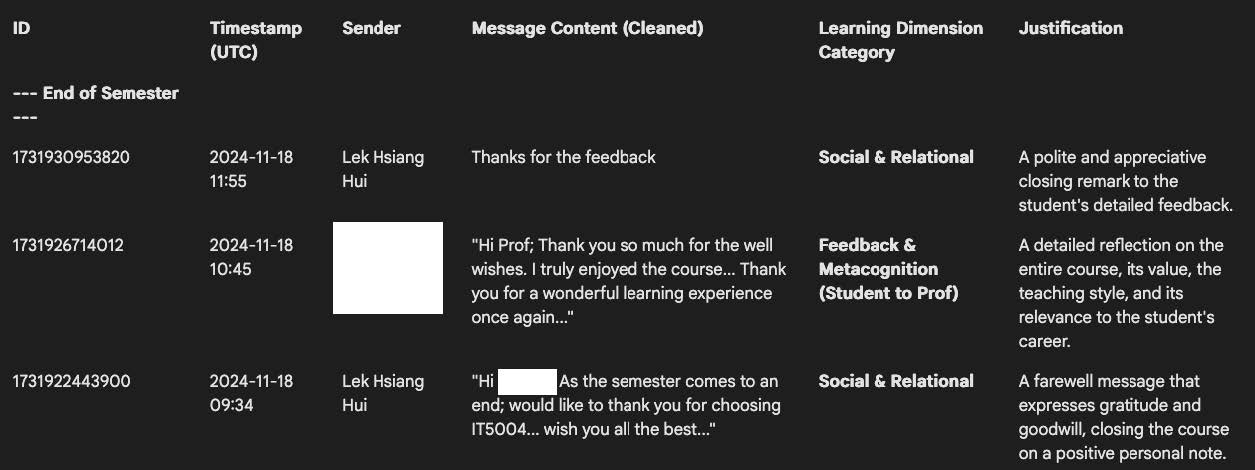

a. Message Classification: Each message is classified into one of several high-level categories, such as Conceptual Clarification & Q&A, Feedback & Instruction (Prof to Student), Feedback & Metacognition (Student to Prof), Course Administration & Logistics, Social & Relational, and Simple Acknowledgment. The resulting counts provide a quantitative overview of the dialogue’s nature (Figure 1).
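Stage (a) can be framed as a single prompt followed by a tally. The sketch below is illustrative only: the prompt wording and the JSON reply format are hypothetical, and the model is abstracted as a call_llm(prompt) -> str callable rather than a specific Gemini client call, so any LLM SDK could be plugged in. Labels outside the category list are counted as "Unparsed" instead of being silently accepted.

```python
import json
from collections import Counter
from typing import Callable, Iterable, List

# Category labels from the paper; prompt wording and reply format are hypothetical.
CATEGORIES = [
    "Conceptual Clarification & Q&A",
    "Feedback & Instruction (Prof to Student)",
    "Feedback & Metacognition (Student to Prof)",
    "Course Administration & Logistics",
    "Social & Relational",
    "Simple Acknowledgment",
]


def build_classification_prompt(messages: Iterable[dict]) -> str:
    """List the categories, ask for a JSON {id: category} reply, then append the messages."""
    listing = "\n".join(f'{m["id"]}\t{m["fromDisplayName"]}: {m["content"]}' for m in messages)
    return (
        "Classify each chat message below into exactly one of these categories:\n"
        + "\n".join(f"- {c}" for c in CATEGORIES)
        + "\nReply only with a JSON object mapping each message id to its category.\n\n"
        + listing
    )


def classify_messages(messages: List[dict], call_llm: Callable[[str], str]) -> Counter:
    """Run one classification pass; labels outside CATEGORIES are tallied as 'Unparsed'."""
    labels = json.loads(call_llm(build_classification_prompt(messages)))
    counts: Counter = Counter()
    for m in messages:
        label = labels.get(m["id"])
        counts[label if label in CATEGORIES else "Unparsed"] += 1
    return counts
```

In practice a model may wrap its JSON in Markdown fences or add commentary, so a production version would need more defensive parsing, and long chat histories would have to be sent in batches.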


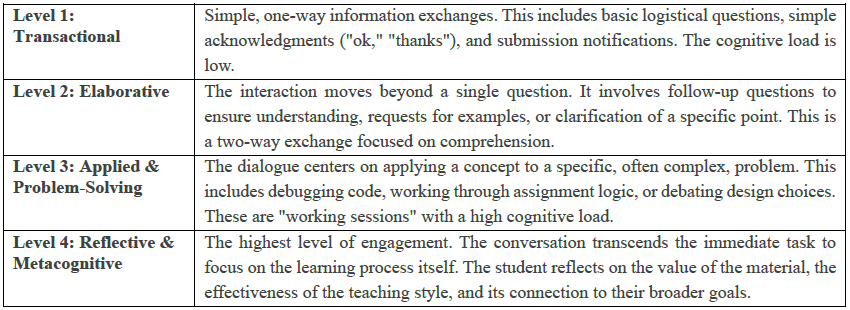

b. Interaction Depth Analysis: The model is then prompted to analyse entire conversational threads and assess their cognitive depth, classifying each thread on a four-level scale ranging from Transactional to Reflective & Metacognitive (Table 1). This reveals the quality of engagement beyond simple message frequency.
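Stage (b) needs a working notion of a "thread". Teams chats are continuous, so the sketch below assumes, hypothetically, that a new thread starts after six hours of silence, and that the rows passed in belong to a single chat (the four CSV fields carry no chat identifier). Only the endpoints of the scale are named in the text, so the model is asked for a level from 1 (Transactional) to 4 (Reflective & Metacognitive). As before, call_llm is a hypothetical stand-in for whichever LLM client is used.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Callable, List

GAP = timedelta(hours=6)  # hypothetical: a longer silence starts a new thread


def parse_ts(ts: str) -> datetime:
    """Parse a Graph ISO 8601 timestamp such as 2024-03-01T09:15:00Z."""
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def split_threads(rows: List[dict], gap: timedelta = GAP) -> List[List[dict]]:
    """Order one chat's messages by time and cut a new thread after each long gap."""
    threads: List[List[dict]] = []
    last = None
    for row in sorted(rows, key=lambda r: parse_ts(r["createdDateTime"])):
        ts = parse_ts(row["createdDateTime"])
        if last is None or ts - last > gap:
            threads.append([])
        threads[-1].append(row)
        last = ts
    return threads


def rate_depth(thread: List[dict], call_llm: Callable[[str], str]) -> int:
    """Ask the model for one level, 1 (Transactional) to 4 (Reflective & Metacognitive)."""
    transcript = "\n".join(f'{r["fromDisplayName"]}: {r["content"]}' for r in thread)
    prompt = (
        "Rate the cognitive depth of this conversation on a four-level scale where 1 is "
        "Transactional and 4 is Reflective & Metacognitive. Reply with the digit only.\n\n"
        + transcript
    )
    level = int(call_llm(prompt).strip())
    if not 1 <= level <= 4:
        raise ValueError(f"depth level out of range: {level}")
    return level


def depth_profile(rows: List[dict], call_llm: Callable[[str], str]) -> Counter:
    """Count threads per depth level for one chat."""
    return Counter(rate_depth(t, call_llm) for t in split_threads(rows))
```

Rating whole threads rather than single messages matches the text's emphasis on conversational context; the gap threshold is the main knob and would need tuning against real chat rhythms.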


Table 1: Description of the interaction depth levels


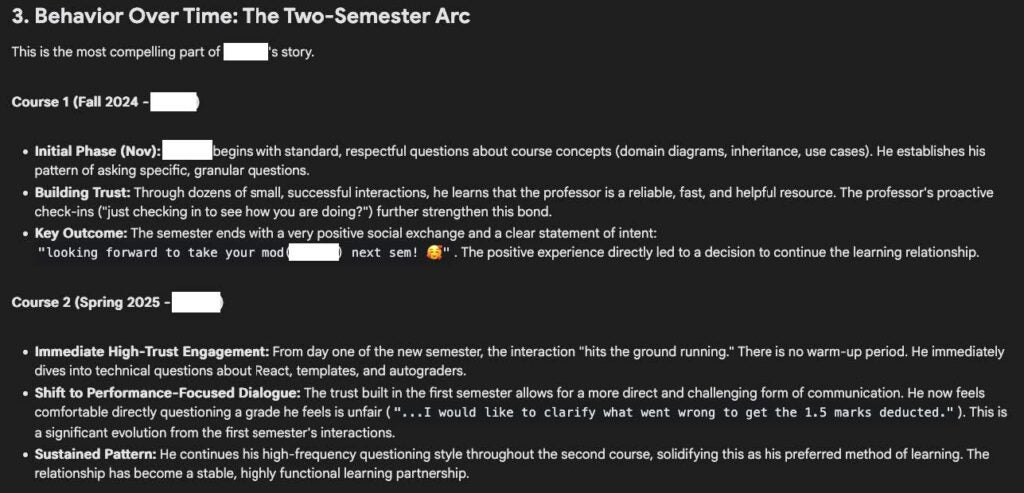

c. Longitudinal Pattern Recognition: For students who take courses over multiple semesters, the LLM can analyse each student’s complete history to identify recurring patterns and learner archetypes, such as the Iterative Clarifier or the Problem-Focused Advocate, and summarise the student’s behaviour over time (Figure 2).
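A minimal sketch of stage (c), assuming a hypothetical semester mapping (August to December as Semester 1, January to July as Semester 2) and the flat CSV rows from the export step. It gathers each student's own messages, keeps only students who appear in more than one semester, and builds a semester-tagged prompt asking for patterns and an archetype. The archetype names come from the text; the helper names and prompt wording are illustrative.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List


def semester_label(ts: str) -> str:
    """Hypothetical mapping: Aug-Dec is S1 of AY y/y+1; Jan-Jul is S2 of AY y-1/y."""
    d = datetime.fromisoformat(ts.replace("Z", "+00:00"))
    if d.month >= 8:
        return f"AY{d.year}/{d.year + 1} S1"
    return f"AY{d.year - 1}/{d.year} S2"


def multi_semester_histories(rows: List[dict], instructor: str) -> Dict[str, List[dict]]:
    """Group each student's own messages; keep students seen in more than one semester."""
    by_student: Dict[str, List[dict]] = defaultdict(list)
    for row in rows:
        if row["fromDisplayName"] != instructor:
            by_student[row["fromDisplayName"]].append(row)
    return {
        # ISO 8601 strings in one uniform format sort chronologically.
        name: sorted(msgs, key=lambda r: r["createdDateTime"])
        for name, msgs in by_student.items()
        if len({semester_label(r["createdDateTime"]) for r in msgs}) > 1
    }


def build_history_prompt(student: str, msgs: List[dict]) -> str:
    """Semester-tagged history plus a request for patterns, an archetype and a trend summary."""
    history = "\n".join(f'[{semester_label(r["createdDateTime"])}] {r["content"]}' for r in msgs)
    return (
        f"Below are all chat messages from student {student} across several semesters.\n"
        "Identify recurring patterns and a learner archetype (for example, Iterative "
        "Clarifier or Problem-Focused Advocate), then summarise how the behaviour "
        "changed over time.\n\n" + history
    )
```

Grouping by display name is a simplification; a stable user id would be more robust if it were added to the export.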


Strategies for Enhancing Student Learning Outcomes

The analytical results inform a set of data-driven strategies designed to provide optimal scaffolding, cultivate interest, and promote independent learning.

- Optimal Scaffolding: Informed by the principles of Wood, Bruner, and Ross (1976), the level of support is tailored to the analysed interaction depth. A transactional query receives a direct answer, while an elaborative or problem-solving query prompts a Socratic response (“What have you tried?”) to guide the student’s thinking process. This intentional fading of support builds student confidence and capability.

- Cultivating Interest in Learning: By identifying moments of deep engagement or conceptual struggle, the instructor can transform routine questions into “teachable moments.” For example, a dialogue flagged as Applied Problem-Solving can be used to discuss underlying design principles or real-world applications, connecting the task to broader concepts and sparking genuine intellectual curiosity; such engagement is a key component of effective feedback (Hattie & Timperley, 2007).

- Fostering Independent Learning: The ultimate goal is to use the data to empower students. When analysis reveals a student is overly reliant on affirmation, the instructor can deliberately shift to more probing, open-ended questions. By tracking subsequent interactions, the instructor can measure the development of the student’s self-reliance and problem-solving skills over time.
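The three strategies can be partly operationalised on top of the depth ratings. The sketch below is hypothetical throughout: the rule that only level 1 receives a direct answer, the level-3 cut-off for teachable moments, and the use of mean thread depth per term as a proxy for growing self-reliance are illustrative choices, not measures defined in the paper. The code only surfaces candidates; the instructor still decides how to respond.

```python
from statistics import mean
from typing import Dict, List, Tuple


def scaffolding_mode(depth: int) -> str:
    """Hypothetical rule: direct answers for level 1 (Transactional), Socratic prompts above it."""
    if depth <= 1:
        return "direct answer"
    return 'Socratic prompt ("What have you tried?")'


def teachable_moments(rated: List[Tuple[str, int]], min_level: int = 3) -> List[str]:
    """Return ids of threads at or above a hypothetical depth cut-off for follow-up discussion."""
    return [thread_id for thread_id, level in rated if level >= min_level]


def depth_trend(levels_by_term: Dict[str, List[int]]) -> Tuple[List[Tuple[str, float]], float]:
    """Mean thread depth per term and the change from first to last term.

    Assumes term labels sort chronologically (e.g. 'AY2023/2024 S1').
    A rising mean is used here only as a rough proxy for growing self-reliance.
    """
    series = [(term, float(mean(lv))) for term, lv in sorted(levels_by_term.items()) if lv]
    change = series[-1][1] - series[0][1] if len(series) > 1 else 0.0
    return series, change
```

For instance, a student whose threads average 1.5 in one term and 3.0 a year later shows a change of 1.5, which would prompt a closer qualitative look rather than serve as a verdict on its own.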


Conclusion

This paper demonstrates a robust and replicable methodology for turning unstructured Microsoft Teams chat data into a powerful tool for pedagogical reflection and action. By using the Graph API and LLMs, educators can move beyond anecdotal evidence to systematically analyse and enhance their engagement practices. The framework suggests that extensive digital interaction, when guided by data and pedagogical intent, can be a springboard for developing resilient, independent learners, directly countering concerns about fostering dependency.


References

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112. https://doi.org/10.3102/003465430298487

Kuh, G. D. (2009). What student affairs professionals need to know about student engagement. Journal of College Student Development, 50(6), 683–706. https://doi.org/10.1353/csd.0.0099

Wood, D., Bruner, J. S., & Ross, G. (1976). The role of tutoring in problem solving. Journal of Child Psychology and Psychiatry, 17(2), 89–100. https://doi.org/10.1111/j.1469-7610.1976.tb00381.x